NanoGPT-inference

LLM inference from scratch

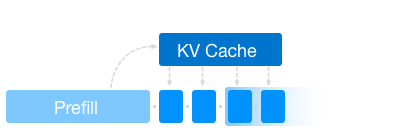

LLM inference, the engineering behind serving LLMs efficiently and economically, is becoming increasingly important. In this post, I'll show you how to speed up LLM inference with various techniques. I also release the code for each inference engine as a simple extension of Karpathy's nanoGPT.
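KV caching is one widely used technique for speeding up autoregressive decoding, though this summary does not say which techniques the post covers. The sketch below is pure Python with a single attention head, random weights and fixed stand-in inputs. All names are hypothetical and not taken from the nanoGPT code. It checks that reusing cached keys and values gives the same outputs as recomputing them for the whole prefix at every step.

```python
import math
import random


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, keys, values):
    """Scaled dot-product attention of one query over the given keys/values."""
    scale = math.sqrt(len(q))
    weights = softmax([sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


class KVCache:
    """Stores the key and value vectors of every token processed so far."""

    def __init__(self):
        self.keys = []
        self.values = []


def decode_step_cached(x, wq, wk, wv, cache):
    # Only the newest token is projected; earlier keys/values are reused.
    cache.keys.append(matvec(wk, x))
    cache.values.append(matvec(wv, x))
    return attend(matvec(wq, x), cache.keys, cache.values)


def decode_step_uncached(prefix, wq, wk, wv):
    # Without a cache, keys/values for the whole prefix are recomputed each step.
    keys = [matvec(wk, x) for x in prefix]
    values = [matvec(wv, x) for x in prefix]
    return attend(matvec(wq, prefix[-1]), keys, values)


def demo(seq_len=6, dim=4, seed=0):
    rng = random.Random(seed)

    def rand_matrix():
        return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    wq, wk, wv = rand_matrix(), rand_matrix(), rand_matrix()
    xs = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(seq_len)]
    cache = KVCache()
    cached = [decode_step_cached(x, wq, wk, wv, cache) for x in xs]
    uncached = [decode_step_uncached(xs[: t + 1], wq, wk, wv) for t in range(seq_len)]
    return cached, uncached
```

The cached path projects one token per step, while the uncached path reprojects the entire prefix, so its projection work grows quadratically with sequence length even though both produce the same attention outputs.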

Deploying DeepSeek R1 'locally'

A Practical Guide with Ray and vLLM

This guide demonstrates how to deploy DeepSeek R1 (671B parameters) in FP8 across multiple H100 nodes using Ray and vLLM.

European Tweeties

Creating language models for all 24 EU languages

Existing language models are either focused on English or multilingual, with reasonable performance in the bigger languages. However, many languages are not supported or perform a lot worse than when prompted in English. To address this, we are creating 23 new language models for all EU languages.

Setting up a decent SentencePiece tokenizer

Reasonable monolingual tokenization from noisy data

Tokens are what make language models understand language. Each sentence is split into tokens, which are then converted to embeddings. So we want good tokens that cover as many of a language's words as possible within our limited vocabulary. Luckily, there are many libraries for this, like SentencePiece. However, getting decent results on noisy data requires non-trivial configuration.
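To make the split-then-embed pipeline concrete, here is a toy sketch. It uses greedy longest-match splitting over a fixed, hand-written vocabulary, which is not SentencePiece's algorithm (SentencePiece learns its vocabulary from data with unigram or BPE models). Every name in it is hypothetical.

```python
import random

UNK = "<unk>"


def greedy_tokenize(word, vocab):
    """Split one word into the longest vocabulary pieces, left to right.
    Single characters not in the vocabulary become <unk>."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it is in the vocabulary or one character long.
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        piece = word[start:end]
        pieces.append(piece if piece in vocab else UNK)
        start = end
    return pieces


def tokenize(sentence, vocab):
    """Lowercase, split on whitespace, then split each word into pieces."""
    return [p for word in sentence.lower().split() for p in greedy_tokenize(word, vocab)]


def build_embeddings(vocab, dim, seed=0):
    """Assign every token (plus <unk>) an id and a random embedding vector."""
    rng = random.Random(seed)
    token_to_id = {tok: i for i, tok in enumerate(sorted(vocab | {UNK}))}
    table = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in token_to_id]
    return token_to_id, table


def embed(tokens, token_to_id, table):
    """Look up the embedding vector of each token via its id."""
    return [table[token_to_id[t]] for t in tokens]


vocab = {"the", "cat", "sat", "on", "mat", "s"}
tokens = tokenize("The cats sat on the mat", vocab)
print(tokens)  # ['the', 'cat', 's', 'sat', 'on', 'the', 'mat']
```

The word "cats", missing from the toy vocabulary, splits into `cat` + `s`, and a word made of uncovered characters turns into a run of `<unk>` tokens. A well-configured vocabulary tries to keep both effects small; in SentencePiece, training options such as `vocab_size` and `character_coverage` govern this trade-off.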

Dutch Chat Toolkit

Creating retrieval-augmented chatbots

A lot of NLP technologies are easy to use for beginners, but creating and deploying a chatbot is still a bit tricky. Let's make a Python CLI toolkit to quickly create a chatbot with a web-based user interface.
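Retrieval augmentation means fetching relevant passages and placing them in the prompt before the model answers. The sketch below assumes a plain bag-of-words cosine similarity instead of learned embeddings. `retrieve` and `build_prompt` are hypothetical names, not the toolkit's API.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercased word tokens; \\w is Unicode-aware, so accented letters are kept."""
    return re.findall(r"\w+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Put the retrieved passages into the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer the question using the context.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


docs = [
    "The library opens at 9 am on weekdays.",
    "Parking is free after 6 pm.",
    "The museum is closed on Mondays.",
]
print(build_prompt("When does the library open?", docs, k=1))
```

The resulting prompt string would then be sent to whichever language model backs the chatbot.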

Building a language learning app

Day 3: Prompting and basic UI

With the current state of transformer models for text and speech, I believe that there is an opportunity to make fully immersive language learning apps that can tailor their content to what the user wants to learn. In this series, I try to work out a demo using different NLP technologies.

Building a language learning app

Day 2: Setting up the app

Building a language learning app

Day 1: Planning

Migrating from HuggingFace AdamW

Drop-in replacement optimizer with learning rate schedule

The AdamW implementation from HuggingFace is deprecated and can even lead to errors. This short blog post suggests a drop-in replacement.
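A common replacement is `torch.optim.AdamW` combined with a linear warmup and decay schedule. Whether this is exactly the combination the post suggests is an assumption. The multiplier below follows the shape of `get_linear_schedule_with_warmup` from `transformers`, written in plain Python so it has no dependencies. `linear_warmup_decay` and `make_lr_lambda` are hypothetical names.

```python
from functools import partial


def linear_warmup_decay(step, warmup_steps, total_steps):
    """Learning-rate multiplier: rises linearly from 0 to 1 over warmup_steps,
    then falls linearly to 0 at total_steps and stays at 0 afterwards."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


def make_lr_lambda(warmup_steps, total_steps):
    """Bind the schedule lengths so the result takes only the step count."""
    return partial(linear_warmup_decay, warmup_steps=warmup_steps, total_steps=total_steps)


schedule = make_lr_lambda(warmup_steps=10, total_steps=100)
print([schedule(s) for s in (0, 5, 10, 55, 100)])  # [0.0, 0.5, 1.0, 0.5, 0.0]
```

With PyTorch, the returned function can be passed as the `lr_lambda` argument of `torch.optim.lr_scheduler.LambdaLR`, wrapping an optimizer such as `torch.optim.AdamW(model.parameters(), lr=5e-5)`. The default `eps` and `weight_decay` differ between the Hugging Face and PyTorch implementations, so setting them explicitly keeps training behavior unchanged.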

Updating RobBERT (part 2)

Bringing a language model to 2022

In this second blog post on updating RobBERT, I discuss the training and analyse how well the model performs on old benchmarks and new tasks.

Updating RobBERT

Bringing a language model to 2022

A few things have happened since our Dutch language model RobBERT was trained in 2019: new words have entered the language and word usage has shifted. In this blog post, I explore how to update RobBERT efficiently to include these changes.